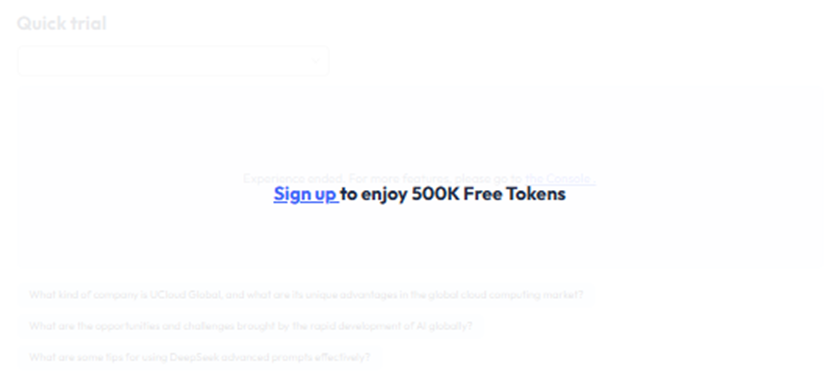

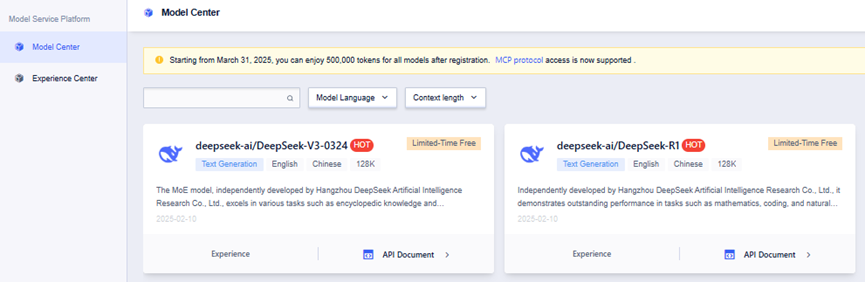

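UCloud-Global.com is UCloud's international site. It now offers a DeepSeek R1 & V3 API page built on UCloud's UModelVerse model marketplace, which lets you use the DeepSeek R1 and V3 models online and call them through an API. Getting an API key for DeepSeek R1 and V3 takes about 5 minutes. Access is free for a limited time, and new registrations currently receive 500,000 free tokens.

DeepSeek R1 & V3 API page: https://www.ucloud-global.com/en/product/UModelVerse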

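API advantages

Complete API: UModelVerse provides APIs for the full 671B-parameter DeepSeek-R1 and DeepSeek-V3 models, with flexible calling options.

One-click access: DeepSeek can be deployed quickly and go live within minutes, which makes it easy to build an enterprise knowledge base.

Low latency: a low-latency network keeps usage smooth and serves applications worldwide.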

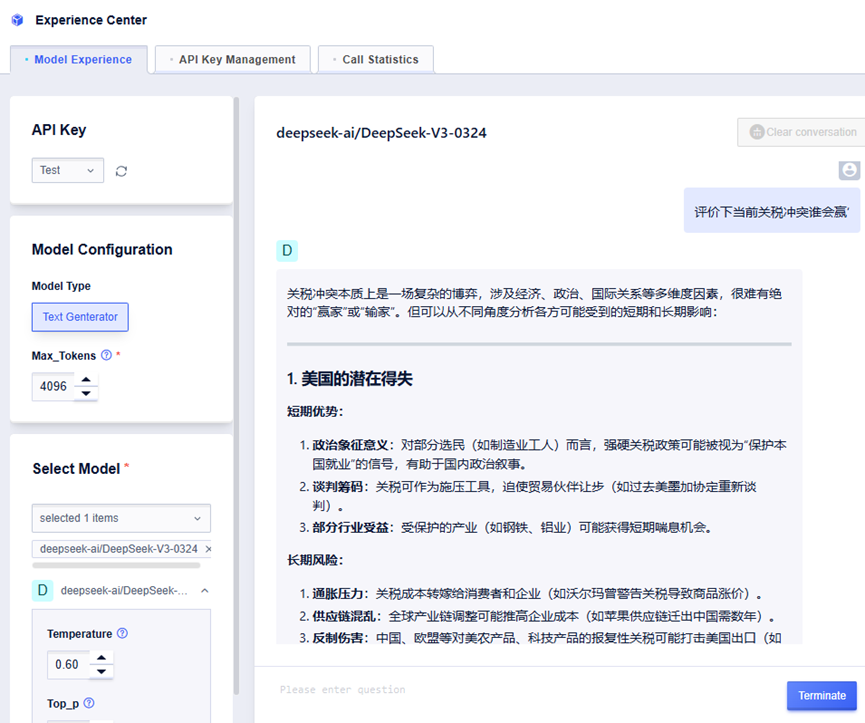

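Trying DeepSeek on the front end

After registering and logging in with an email address or a Google account, you can try 3 conversations on the page. After those 3, go to the console to keep trying the model or to connect to the API.

Front-end interface

Console interface and usage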

API integration steps

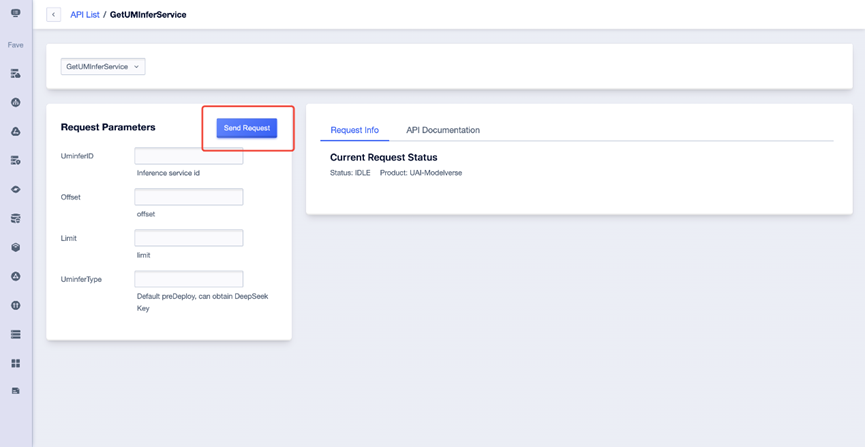

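Step 1: Get an API Key

Open the [API List] page. No parameters need to be filled in; just click "Send Request".

In the pop-up window, click "Confirm Send Request".

From the returned list, pick the Key you need by model name.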

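Step 2: Call the chat API

Request

Request headers

| Name | Type | Required | Description |
| --- | --- | --- | --- |
| Content-Type | string | Yes | Fixed value: application/json |
| Authorization | string | Yes | The Key obtained in Step 1, sent as `Bearer <Your API Key>` |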
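The two headers in the table can be assembled with a small helper. This is a minimal sketch: `build_headers` is a hypothetical name, and the `Bearer ` prefix is taken from the curl request example in this post rather than from a separate authentication specification.

```python
def build_headers(api_key: str) -> dict[str, str]:
    """Return the two required request headers for the chat API."""
    key = api_key.strip()
    if not key:
        raise ValueError("api_key must not be empty")
    return {
        # Fixed value required by the API.
        "Content-Type": "application/json",
        # Key from Step 1; the "Bearer " prefix follows the curl example.
        "Authorization": f"Bearer {key}",
    }
```

Reading the key from an environment variable instead of hard-coding it keeps it out of source control.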

Request parameters

| Name | Type | Required | Description |
| --- | --- | --- | --- |
| model | string | Yes | Model ID |
| messages | List[message] | Yes | Chat context. (1) `messages` must not be empty. One member is a single-turn conversation; several members are a multi-turn conversation. Single-member example: `"messages": [{"role": "user", "content": "Hello"}]`. Three-member example: `"messages": [{"role": "user", "content": "Hello"}, {"role": "assistant", "content": "How can I help you?"}, {"role": "user", "content": "Please introduce yourself"}]`. (2) The last message is the current request; the earlier messages are conversation history. (3) Roles: ① the role of the first message must be `user` or `system`; ② the role of the last message must be `user` or `tool`; ③ when function calling is not used and the first role is `user`, roles must alternate user -> assistant -> user ..., so messages at odd positions (1, 3, 5) must be `user` or `function` and messages at even positions (2, 4) must be `assistant`. |
| stream | bool | No | Whether to return data as a streaming response. (1) Beam search models only accept `false`. (2) Defaults to `false`. |
| stream_options | stream_options | No | Whether a streaming response reports usage. `true`: the last chunk carries a field with the token statistics for the whole request. `false`: the streaming response does not report usage (the default). |
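The role rules for `messages` are easy to get wrong, so it can help to check them before sending a request. The sketch below covers only the case without function calling. `validate_plain_chat_messages` and `build_payload` are hypothetical helper names, and skipping a leading `system` message before checking alternation is an assumption, since the table only spells out the case where the first role is `user`.

```python
import json


def validate_plain_chat_messages(messages: list[dict]) -> None:
    """Check the role rules from the table for the no-function-call case."""
    if not messages:
        raise ValueError("messages must not be empty")
    roles = [m["role"] for m in messages]
    if roles[0] not in ("user", "system"):
        raise ValueError("first message must have role 'user' or 'system'")
    # Assumption: a leading system message is skipped before checking alternation.
    turns = roles[1:] if roles[0] == "system" else roles
    if not turns or turns[-1] != "user":
        raise ValueError("last message must have role 'user'")
    for position, role in enumerate(turns, start=1):
        expected = "user" if position % 2 == 1 else "assistant"
        if role != expected:
            raise ValueError(f"message {position} should be '{expected}', got '{role}'")


def build_payload(model: str, messages: list[dict],
                  stream: bool = False, include_usage: bool = False) -> str:
    """Serialize the request body; usage reporting only applies to streaming."""
    validate_plain_chat_messages(messages)
    payload = {"model": model, "messages": messages, "stream": stream}
    if stream and include_usage:
        payload["stream_options"] = {"include_usage": True}
    return json.dumps(payload, ensure_ascii=False)
```

For example, `build_payload("deepseek-ai/DeepSeek-R1", [{"role": "user", "content": "Hello"}])` returns a JSON string that can be used as the request body.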

Request example

    curl --location 'https://deepseek.modelverse.cn/v1/chat/completions' \
    --header 'Authorization: Bearer <Your API Key>' \
    --header 'Content-Type: application/json' \
    --data '{
        "reasoning_effort": "low",
        "stream": true,
        "model": "deepseek-ai/DeepSeek-R1",
        "messages": [
            {
                "role": "user",
                "content": "say hello to ucloud"
            }
        ]
    }'

Response

Response parameters

| Name | Type | Description |
| --- | --- | --- |
| id | string | Unique identifier of this request; useful for troubleshooting |
| object | string | Object type. `chat.completion`: a multi-turn conversation response |
| created | int | Timestamp |
| model | string | (1) For a preset service, the model ID is returned. (2) For a service deployed after SFT, this field returns `model:modelversionID`, where `model` matches the request parameter and is the large model ID used in this request, and `modelversionID` is used for tracing. |
| choices | choices/sse_choices | `choices`: the returned content when `stream=false`. `sse_choices`: the returned content when `stream=true`. |
| usage | usage | Token statistics. (1) Synchronous requests return it by default. (2) Streaming requests do not return it by default. With `stream_options.include_usage=true`, the actual values are returned in the last chunk and the other chunks return null. |
| search_results | search_results | List of search results |
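For a non-streaming call, the fields most callers need are the answer text, the reasoning text, and the token count. The following is a minimal sketch that pulls them out of an already parsed response. `summarize_completion` is a hypothetical helper; the `message.content` and `message.reasoning_content` names are taken from the response example in this post.

```python
def summarize_completion(response: dict) -> dict:
    """Extract the commonly used fields from a non-streaming response."""
    choice = response["choices"][0]
    message = choice["message"]
    # usage may be missing or null, so fall back to an empty dict.
    usage = response.get("usage") or {}
    return {
        "id": response.get("id"),
        "answer": (message.get("content") or "").strip(),
        "reasoning": (message.get("reasoning_content") or "").strip(),
        "finish_reason": choice.get("finish_reason"),
        "total_tokens": usage.get("total_tokens"),
    }
```

Logging the `id` alongside the answer makes it easier to report a problem later, since the table notes that it can be used for troubleshooting.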

Response example

    {
        "id": " ",
        "object": "chat.completion",
        "created": <timestamp>,
        "model": "models/DeepSeek-R1",
        "choices": [
            {
                "index": 0,
                "message": {
                    "role": "assistant",
                    "content": "\n\nHello, UCloud Global! 👋 If there's anything specific you'd like to know or discuss about UCloud Global's services (like cloud computing, storage, AI solutions, etc.), feel free to ask! 😊",
                    "reasoning_content": "\nOkay, the user wants to say hello to UCloud Global. Let me start by greeting UCloud Global directly.\n\nHmm, should I mention what UCloud Global is? Maybe a brief intro would help, like it's a cloud service provider.\n\nThen, I can ask if there's anything specific the user needs help with regarding UCloud Global services.\n\nKeeping it friendly and open-ended makes sense for a helpful response.\n"
                },
                "finish_reason": "stop"
            }
        ],
        "usage": {
            "prompt_tokens": 8,
            "completion_tokens": 129,
            "total_tokens": 137,
            "prompt_tokens_details": null,
            "completion_tokens_details": null
        },
        "system_fingerprint": ""
    }

Error codes

If the request is invalid, the JSON returned by the server contains the following parameters.

| HTTP status code | Type | Error code | Error message | Description |
| --- | --- | --- | --- | --- |
| 400 | invalid_request_error | invalid_messages | Sensitive information | The message contains sensitive content |
| 400 | invalid_request_error | characters_too_long | Conversation token output limit | The maximum `max_tokens` currently supported by the DeepSeek series models is 12288 |
| 400 | invalid_request_error | tokens_too_long | Prompt tokens too long | [User input error] The request content exceeds the internal limit of the large model. Try shortening the input. |
| 400 | invalid_request_error | invalid_token | Validate Certification failed | Invalid bearer token. Refer to [Authentication Explanation] to get the latest key. |
| 400 | invalid_request_error | invalid_model | No permission to use the model | The key has no permission for this model |
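Since every error in the table shares HTTP status 400, the error code is what tells the cases apart. The sketch below maps each code to a suggested next step. `ERROR_HINTS` and `explain_error` are hypothetical names, and the JSON shape (fields nested under an `error` object, with the code in a `code` field) is an assumption: the post lists the fields but does not show a sample error body.

```python
# Suggested next steps, paraphrased from the error table.
ERROR_HINTS = {
    "invalid_messages": "The message was flagged as sensitive; rephrase the input.",
    "characters_too_long": "Lower max_tokens; the DeepSeek series supports at most 12288.",
    "tokens_too_long": "The prompt exceeds the model limit; shorten the input.",
    "invalid_token": "The bearer token is invalid; fetch the latest key and retry.",
    "invalid_model": "The key has no permission for this model; pick a key that matches it.",
}


def explain_error(error_body: dict) -> str:
    """Turn a parsed error response into a one-line hint."""
    # Assumption: fields sit under "error"; fall back to the top level otherwise.
    error = error_body.get("error", error_body)
    code = error.get("code", "")
    hint = ERROR_HINTS.get(code, "Unrecognised error code; check the request against the tables.")
    return f"{code or 'unknown'}: {hint}"
```

None of these errors is worth retrying unchanged, because each one points at something in the request itself (content, length, key, or model) that has to be fixed first.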

老刘博客
